Computers

How computers work.

Learn

- #todo https://cpu.land

- #todo https://www.nand2tetris.org

- #todo https://en.wikipedia.org/wiki/Little_Computer_3

CPU

Central processing unit.

CPUs are dumb: they know nothing about the concept of a program. They just execute instructions fetched from memory in an infinite loop.

These instructions are binary data: a few bytes that encode the operation itself (the opcode), followed by its operands. A series of such binary instructions is called machine code. Assembly language is a human-readable representation of it.

The instruction pointer holds the location of the next instruction in the memory. This pointer is stored in a register: a small storage bucket that is extremely fast to access.

Program execution starts with the OS loading a file with machine code into memory and instructing the CPU to move the instruction pointer to that location.
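The fetch-execute loop described above can be sketched as a toy machine. This is only an illustration: the opcodes (`LOAD`, `ADD`, `HALT`), the two-byte instruction encoding, and the single accumulator register are all invented here and do not correspond to any real architecture.

```python
# Toy machine: opcodes and encoding are made up for illustration.
LOAD, ADD, HALT = 0x01, 0x02, 0xFF


def run(memory, start):
    """Execute instructions from `memory`, beginning at address `start`."""
    ip = start   # instruction pointer "register"
    acc = 0      # accumulator "register"
    while True:
        opcode = memory[ip]              # fetch
        if opcode == LOAD:               # decode and execute
            acc = memory[ip + 1]
            ip += 2
        elif opcode == ADD:
            acc += memory[ip + 1]
            ip += 2
        elif opcode == HALT:
            return acc
        else:
            raise ValueError(f"unknown opcode {opcode:#x} at address {ip}")


# "Machine code" for: LOAD 2; ADD 3; HALT
program = bytes([LOAD, 2, ADD, 3, HALT])

memory = bytearray(16)
memory[4:4 + len(program)] = program   # the "OS" loads the program at address 4
result = run(memory, start=4)          # ...and points the instruction pointer at it
print(result)  # 5
```

The loop never looks at the program as a whole: it only reads whatever byte the instruction pointer refers to.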

Modes (rings)

The mode of the CPU controls what it is allowed to do. Just like the instruction pointer, it is stored in a register.

- Kernel mode: any supported instruction is allowed, all memory is accessible.

- User mode: only a subset of instructions is allowed, memory access is limited.

Generally, the kernel and drivers run in kernel mode while applications run in user mode.

User programs can't be trusted to have full access to the computer, so they operate via the kernel's interface: system calls. Software (and hardware) can also raise interrupts: special signals that make the CPU pause the current execution and jump to handler code in order to perform some action.
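A small sketch of a user-mode program going through the kernel's interface. It assumes a POSIX-like system, where Python's `os.pipe`, `os.write`, and `os.read` are thin wrappers over the corresponding system calls; the program never touches the underlying buffer directly.

```python
import os

# Ask the kernel for a pipe: two file descriptors managed by the kernel.
read_fd, write_fd = os.pipe()

# Each call below crosses from user mode into kernel mode and back.
written = os.write(write_fd, b"hello")   # write system call
data = os.read(read_fd, 5)               # read system call

os.close(read_fd)
os.close(write_fd)

print(written, data)  # 5 b'hello'
```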

Multitasking

Even with a single CPU core, parallelism can be simulated by letting threads take turns: execute a few instructions from thread 1, switch to thread 2, repeat. As a result, both threads remain responsive and neither monopolizes the CPU.

Thread switching is implemented using timer chips: before jumping to program code, the OS instructs a timer chip to trigger an interrupt after a period of time. When the CPU receives the interrupt, it jumps to the OS code, which then saves the program state and switches to another thread. This is called preemptive multitasking.

The amount of time a thread is allowed to run before it is interrupted is called the time slice.
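A rough simulation of time slicing, under heavy simplifications: each generator stands in for a thread, each `next()` call models one instruction, and a loop counter plays the role of the timer chip. Real preemption is driven by hardware interrupts and does not need the thread's cooperation; the names `thread` and `schedule` are hypothetical.

```python
from collections import deque


def thread(name, steps, log):
    """A fake thread that executes `steps` instructions."""
    for i in range(steps):
        log.append(f"{name}:{i}")   # one "instruction"
        yield                       # point where the scheduler may switch


def schedule(threads, time_slice):
    """Round-robin: run each thread for at most `time_slice` instructions."""
    queue = deque(threads)
    while queue:
        current = queue.popleft()
        for _ in range(time_slice):     # run until the "timer" fires
            try:
                next(current)
            except StopIteration:
                break                   # thread finished, drop it
        else:
            queue.append(current)       # slice expired, back of the queue


log = []
schedule([thread("A", 3, log), thread("B", 3, log)], time_slice=2)
print(log)  # ['A:0', 'A:1', 'B:0', 'B:1', 'A:2', 'B:2']
```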

Architecture

#todo Add details.

- x86

- RISC-V

- AArch64 (ARM)

Cache

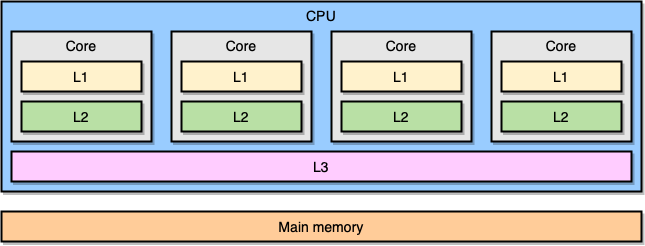

The CPU cache is a hardware cache that speeds up access to data from the main memory. Each cache layer closer to the CPU is faster, but smaller. Most CPUs have 3 layers: L1 and L2 (typically per core), and L3 (shared between cores).

Data is fetched in blocks of fixed size (typically 64 bytes) called cache lines.
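To make the block granularity concrete, here is a small sketch that maps byte addresses to cache lines. It assumes 64-byte lines (the actual size is hardware-dependent), and the helper names are hypothetical.

```python
CACHE_LINE_SIZE = 64  # bytes; an assumption, check the target hardware


def cache_line_index(address):
    """Index of the cache line that contains the given byte address."""
    return address // CACHE_LINE_SIZE


def same_cache_line(a, b):
    """True if both addresses are fetched together as one block."""
    return cache_line_index(a) == cache_line_index(b)


print(same_cache_line(0, 63))   # True: bytes 0..63 share line 0
print(same_cache_line(63, 64))  # False: byte 64 starts line 1
```

Reading a single byte therefore pulls its 63 neighbours into the cache as well, which is why sequential access tends to be cheap.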

Compare-and-swap

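Compare-and-swap (CAS) is an atomic instruction used to implement synchronization primitives such as mutexes and semaphores. It compares the current value at a memory location with an expected (previously read) value, and only if they are equal (meaning no other thread has updated the value in the meantime) writes the new value. The instruction reports whether the swap happened, so the caller can retry on failure.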
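A sketch of the typical CAS retry loop. Python does not expose a hardware CAS instruction, so the hypothetical `AtomicInt` class below fakes atomicity with a lock; only the shape of the `increment` loop is the point.

```python
import threading


class AtomicInt:
    """Simulated atomic integer; the lock stands in for hardware atomicity."""

    def __init__(self, value=0):
        self._value = value
        self._lock = threading.Lock()

    def load(self):
        with self._lock:
            return self._value

    def compare_and_swap(self, expected, new):
        """Write `new` only if the current value equals `expected`."""
        with self._lock:
            if self._value == expected:
                self._value = new
                return True
            return False


def increment(counter):
    while True:
        old = counter.load()
        if counter.compare_and_swap(old, old + 1):
            return  # nobody changed the value between load and swap
        # otherwise another thread won the race: reload and retry


def worker(counter, times):
    for _ in range(times):
        increment(counter)


counter = AtomicInt()
workers = [threading.Thread(target=worker, args=(counter, 1000)) for _ in range(4)]
for w in workers:
    w.start()
for w in workers:
    w.join()

print(counter.load())  # 4000
```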

Memory

Endianness

The order of bytes in memory.

- Big-endian (BE): most significant byte first (natural order, used in network protocols)

- Little-endian (LE): least significant byte first (reverse order, used by most processor architectures, e.g. x86)
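Both byte orders can be inspected with Python's built-in `int.to_bytes`; the value `0x12345678` is just an arbitrary example.

```python
import sys

value = 0x12345678

big = value.to_bytes(4, byteorder="big")
little = value.to_bytes(4, byteorder="little")

print(big.hex())     # 12345678 (most significant byte 0x12 comes first)
print(little.hex())  # 78563412 (least significant byte 0x78 comes first)

# Decoding must use the same byte order that was used for encoding.
restored = int.from_bytes(little, byteorder="little")
misread = int.from_bytes(little, byteorder="big")

print(hex(restored))  # 0x12345678
print(hex(misread))   # 0x78563412

# Byte order of the machine running this script: 'little' or 'big'.
print(sys.byteorder)
```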

Floating-point arithmetic

IEEE 754 is the standard for floating-point arithmetic.

The in-memory representation is based on scientific notation in base 2 and has 3 components: sign, exponent, and significand.
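The three components can be pulled out of a 64-bit float with the standard `struct` module. This sketch assumes the IEEE 754 double-precision layout (1 sign bit, 11 exponent bits, 52 significand bits) and only reconstructs normal numbers, not zero, subnormals, infinities, or NaN; `decompose` and `recompose` are hypothetical helper names.

```python
import struct


def decompose(x):
    """Split a double into (sign, biased exponent, significand bits)."""
    bits = struct.unpack(">Q", struct.pack(">d", x))[0]
    sign = bits >> 63
    exponent = (bits >> 52) & 0x7FF          # stored with a bias of 1023
    significand = bits & ((1 << 52) - 1)     # fraction, implicit leading 1
    return sign, exponent, significand


def recompose(sign, exponent, significand):
    """Inverse of decompose, valid for normal numbers only."""
    return (-1) ** sign * (1 + significand / 2**52) * 2.0 ** (exponent - 1023)


# -6.5 is -1.101 (binary) * 2^2
sign, exponent, significand = decompose(-6.5)
print(sign)              # 1
print(exponent - 1023)   # 2
print(hex(significand))  # 0xa000000000000
print(recompose(sign, exponent, significand))  # -6.5
```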

❓ Help

Why does 0.1 + 0.2 equal 0.30000000000000004? Because most decimal fractions cannot be stored exactly as binary floating-point numbers. Just as 1/3 cannot be represented by a finite decimal (base 10) fraction, numbers like 1/10 cannot be represented by a finite binary (base 2) fraction, so the nearest representable value is stored instead. See https://floating-point-gui.de for more details.
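A quick way to see this in practice, using only the Python standard library. `Decimal(0.1)` shows the exact value that is actually stored; `math.isclose` and `Fraction` are two common workarounds, not the only ones.

```python
import math
from decimal import Decimal
from fractions import Fraction

total = 0.1 + 0.2

print(total)         # 0.30000000000000004
print(total == 0.3)  # False

# The stored value of 0.1 is slightly larger than one tenth.
print(Decimal(0.1) > Decimal("0.1"))  # True

# Workaround 1: compare with a tolerance instead of ==.
print(math.isclose(total, 0.3))  # True

# Workaround 2: use exact rational arithmetic when precision matters.
exact = Fraction(1, 10) + Fraction(2, 10)
print(exact == Fraction(3, 10))  # True
```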